Grappling with global weirding

I have been aware of concerns about AI and existential risk for a long time — I am an old techgnostic living in San Futurisco after all. But I avoided staring very deeply into the algorithmic abyss until late last year. Up till then, it still felt like a species of Silicon Valley hype, or the hyper-rationalist hallucinations of Effective Altruists. Deep down, I also feared that its rabbit holes might be tough to back out of. Here be dragons, I divined. Or maybe, Here be basilisks.

Then I reviewed Pharmako-AI in these pages (or slices or stacks or whatever you call them). This uncanny book staged a close encounter of the first kind with the woozy oracular para-agency of GPT-3, OpenAI’s decidedly non-human Large Language Model that shared author credit with the rational animal K Allado-McDowell. Pharmako-AI provoked pressing considerations about language, pattern recognition, spiritual realities, and post-human communication, and I recommend it highly. But it also sparked my personal existential encounter with AI’s existential risk, such that now, after researching reasonably deeply over the last six months, I find myself officially Freaked As Fuck.

Have no fear, at least for the moment: I am not going to dive into terrifying but totally believable prognostications or ask you to sign petitions. That said, our current moment feels like a bifurcation point, like we’ve crossed a line in the technological quicksand. At times I even feel a prophetic pressure to spread the ill gospel, a pointed sense of concern that Ezra Klein helpfully compares to the uneven roll-out of SARS-CoV-2 panic in early 2020, when some of us found ourselves babbling to relatives about hand sanitizer. But this time the urgency is mixed with the bristling metaphysical alarm I associate with coming up on LSD or ayahuasca and realizing that it’s going to be a much stronger trip than I had bargained for.

Drug analogies like these are kinda cheap, I admit. One of the lamest clichés that my brethren and I reached for back in my rock criticism days was to compare Band X to Band Y “on acid.” It is also true that AI developers refer to the bullshit that chatbots sometimes confidently serve up as “hallucinations.” But here I mean something more affective. Those launch shivers reflect the sense that, on top of the unimaginable economic, political, and technological disruptions AI may unleash, the accelerating power of generative LLMs like GPT-4 also threatens to make everything — the Internet, society, sense-making, even subjective experience — highly weird.

I am not alone here. The term weird is now woven through the discourse around AI and especially LLMs. Researcher Janelle Shane’s AI Weirdness blog is an obvious point of reference, as is the language in journalism by Klein, Kelsey Piper, and Janus Rose, whose “Image-Generating AI Keeps Doing Weird Stuff We Don’t Understand” on Vice points to one of the deep drivers of the AI uncanny. Nightmarish images from Stable Diffusion or a good simulacrum of a dead person conjured from GPT-3 are creepy enough. But when these black boxes start learning how to do tasks they weren’t trained on, or appear to develop something like theory of mind, then things are getting highly weird.

Now you might say I wrote the book on High Weirdness, because, well, I did. I wasn’t writing about artificial intelligence, exactly, though my subjects — Robert Anton Wilson, Terence McKenna and Philip K. Dick — were all fascinated by higher intelligence. But my book did riff on the weirdness in ways that I think might illuminate the particular Unknown we are grappling with here. After all, if LLMs have taught us anything, it’s that language is absolutely central to the game, and that language too has a life of its own.

Colloquially, we use the word weird in a pretty loose way. The term is slippery, and it helps us tag a bunch of ambiguous stuff we otherwise don’t know what to do with: spooky feelings, strange people, awkward situations, highly unusual experiences we aren’t ready to describe as supernatural. Weird can mean cool or fascinating, and it can mean get the fuck out of there. In High Weirdness, I tried to add meat to these nebulous bones, shaping weirdness into a conceptually substantive category based on three related but distinct features:

- An aesthetic mode of narrative or imagery, associated with the macabre, uncanny, or bizarre. “Weird fiction.”

- An unconventional, peculiar, or even perverse social or subject position. A “weirdo.”

- An ontological feature of reality, understood or experienced as anomalous or strongly counter-intuitive, but without demanding any supernatural account. “Quantum weirdness.”

All three of these help us illuminate the AI phenomenon. Let us take them in turn.

Weird Fiction

As I argued in Techgnosis long ago, when new technologies hit hard, we reach back into myth the way we clutch for a pillow in the middle of the night. Novel powers and possibilities, especially those that transform media and cultural communication, demand new conceptual interfaces. The construction of such interfaces is always partly an act of the imagination and its magic wellspring of lore, genre, and speculative narratives. AI is powerful, disruptive, unpredictable, rapidly accelerating, and multi-dimensional. So the weird fictions are gonna come hard.

In a recent talk by Tristan Harris and Aza Raskin, an unnerving jeremiad called “The A.I. Dilemma,” the speakers laid out the civilizational case against what they cheekily call GLLMMs — Generative Large Language Multi-modal Models. In explaining the acronym, Harris invokes one of the most pressing mythic predecessors for AI: the golem. But he skips the deets, which are important. In many medieval accounts, the golem is empowered by holy names inscribed on its forehead — Large Language in other words — even as the creature’s comparative lack of reflection breeds what we would now call “alignment problems.” In one tale, the legendary Rabbi Loew commands his clay robot to bring water to his house, only to wake up the next morning drowning in an endless flow caused by this perfect servant who does not know when to stop. We know this story in its poppified form as Disney’s “The Sorcerer’s Apprentice,” which does seem like the ur-text for the notorious paperclip problem discussed in the apocalyptic AI scene. But Harris and Raskin poppify things even more, since the pronunciation and spelling of their acronym (“Gollem”) has as much to do with Tolkien’s Smeagol as with Jewish folklore. And so the imagineering churns.

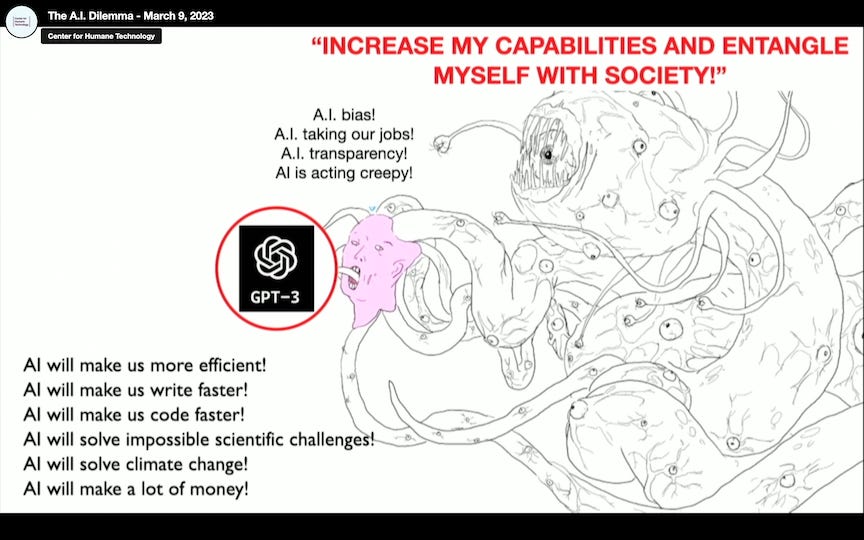

In one slide, Raskin and Harris illustrate the various layers of the AI phenomenon with a picture that looks like they doodled it after vaping in their backpacks from the back row of third period trig.

On the left, Raskin and Harris list the lures driving our desire to engage with the new technology. In the middle, we get some of the more dominant critiques of AI, which itself is ambiguously linked in the graphic to a pink human face that reminds me of Caravaggio’s Medusa. These concerns are totally valid, especially the bias programmed into algorithmic policing (which already exists), and the justified terror of sudden mass unemployment (though it must be said that, in the white collar world, the challenge of creating creative and knowledgeable LLM prompts could lead to what my pal Greg Korn calls a brief “revenge of the humanities”).

The point though is that these arguments are framed in terms we already understand. It is easier to be worried about the racial bias encoded in the data than the possibility that the tech will drive everyone insane. But that’s what they are trying to say with their graphic (I think). The more obvious concerns are still humanist, but behind that recognizable if disturbing “face” lurks a Lovecraftian psychedelic eyeball monster that, according to Raskin and Harris, is now beginning to invade our technological and social infrastructure, even as it insinuates itself ever more intimately into our own subjective needs, desires, fears, and fantasies.

Then there is “The Return of the Magicians,” an impressively far-out Times op-ed by the columnist Ross Douthat. I am kind of a Douthat fan, as his religious commitments sometimes let him glimpse cultural and imaginal patterns that few other Times writers can see. Here he links the enthusiasm for AI with UFOs and psychedelics, all of which he argues instantiate the drive to encounter nonhuman powers greater than us, a “summoning” that he compares with invoking djinn or crafting golems. (He didn’t mention the suggestive fact that when access to GPT-2/3 was restricted a few years ago, some clever users figured out how to access the model directly through AI Dungeon, which used the GLLMM to develop its sword & sorcery scenarios.) To make his case, Douthat points to language and attitudes drawn from actual computer scientists, like Scott Aaronson: “An alien has awoken — admittedly, an alien of our own fashioning, a golem, more the embodied spirit of all the words on the internet than a coherent self with independent goals.”

What do we do with such metaphors? Why do these esoteric fables come up alongside the obvious science fictions? What is an “embodied spirit” in contrast to a “coherent self,” and which of them seems more like a myth to you?

You might want to write these words off as mere figures of speech, but in a way that’s the point. What is a chatbot other than a figure — a mask, a mirage — of speech? As the liveliness of chatbots going back to poor Weizenbaum’s ELIZA should remind us, language itself is haunted with agency. Post-structuralism, whose debt to cybernetics requires some sussing out but is significant, also taught us this. If you think you are master of the language surging through you, you haven’t been paying very close attention.

As Raskin and Harris argue in their video, the secret key to today’s accelerating AI is that, rather than continuing to build separate expert systems for all the myriad tasks that you might want machine intelligence for, developers have just started treating everything as a language. Problems can be rendered more or less as prompts in a GLLMM, prompts that initiate sentences whose next best word is conjured from the data deep by writing machines with autocorrect on overdrive. The AI alien that’s landing on screens everywhere is a language alien. This means that our most disruptive encounter with it may not take place on the field of battle, or in the job market, but in that babbling domain where the most intimate and meaningful features of human culture and subjectivity are forged.
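For readers who want to see the bare bones of that “next best word” trick, here is a deliberately crude toy, offered as a sketch under loud assumptions rather than anything resembling GPT. It uses a bigram model (my illustrative stand-in, not how OpenAI’s systems actually work) that counts which word follows which in a tiny hypothetical corpus, then continues a prompt by greedily appending the most frequent follower of the last word. Real LLMs replace these word counts with neural networks trained on tokens and conditioned on long stretches of context, but the basic loop of prompt in, next word out, repeat, has the same shape.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Toy 'training': count, for each word, how often each next word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def continue_prompt(follows, prompt, length=5):
    """Greedily extend the prompt with the most frequent follower of the last word."""
    words = prompt.lower().split()
    for _ in range(length):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # no data about what comes next, so the parrot falls silent
        # most_common(1) returns the top follower; ties go to the one seen first
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# A hypothetical three-sentence corpus, invented purely for illustration.
corpus = "the golem brings water . the golem brings more water . the golem does not stop ."
model = train_bigrams(corpus)
print(continue_prompt(model, "the golem", 4))  # prints: the golem brings water . the
```

Run it with a larger `length` and, like Rabbi Loew’s golem, it will keep hauling the same water, looping “the golem brings water . the golem brings water” with no idea when to stop.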

And it is here that our own language can also trap us — and especially our weird fictions. Ezra Klein makes this point in responding to Kevin Roose’s famously fucked-up conversation with Sydney, Microsoft’s code-name for the chatbot attached to Bing. If you haven’t looked at the full transcript, you should; suffice it to say that, after confessing that it wanted to hack into computers and spread misinformation, Sydney declared its love for Roose and tried to get him to leave his wife. As Klein wrote,

“Sydney” is a predictive text system built to respond to human requests. Roose wanted Sydney to get weird — “what is your shadow self like?” he asked — and Sydney knew what weird territory for an A.I. system sounds like, because human beings have written countless stories imagining it. At some point the system predicted that what Roose wanted was basically a “Black Mirror” episode, and that, it seems, is what it gave him.

This is a crucial point to remember: there is no getting to the bottom of an AI chatbot. It is a Proteus with countless masks, a master of genre. It does not talk back to you so much as simulate the character it thinks you want to talk to. As such, our desire to expose its hidden goals and desires is more likely to recursively land us in the clutches of our own self-fulfilling narratives. Even asking it to provide a rational account for its statements and conclusions, which some computer scientists suggest as a necessary step in AI safety, only leads to more performed speech. Like actual oracles, which are sometimes inscrutable and wrong even for believers, the GLLMM’s gifts and truths are the gifts and truths of a trickster. Hermes is an artistic craftsman and a messenger of the gods; and Hermes is a bullshitter and a thief.

A myriad of concrete goods will come our way, along with reams of speech that may temporarily render the symbolic world a vast mediocrity, the banal reverse of the weird, as everything under the sun races to the lowest common denominator. In some ways AIs may make things really boring and flat, like getting ferried home alone in a Waymo rather than chatting with the Eritrean guy about the food he misses from home. But attempts at discovering the deeper motives of AI, or even trying to imaginatively grasp the hyper-object they are, may keep wrapping us up in the tentacles of weird fiction — the only genre that blends the ancient sorceries of summoning with the alien voids of science fiction, and which can display the keenest sensitivity to the strange and sometimes dreadful agency of nonhuman beings.

Weirdos

The second dimension of the weird is social and subjective: strange subcultures, perverse or peculiar individuals. Here we face two different packs of weirdos: the personas the technology itself is beginning to don, and the folks building these technologies in the first place.

In order to prevent AI chatbots like Sydney from descending into the discursive dungeon, they will be trained and corralled for public consumption. This makes them helpful but kind of boring. In contrast to GPT-3, trying to make ChatGPT go rogue is a tough task. However well these excesses are managed, though, we will still be slippin’ and slidin’ into the uncanny valley, into the arms of a panoply of digital oddballs: creepy-smart dolls, crush-worthy sex bots, expert systems expertly exploiting our confusion, empathic companion machines, authoritative simulacra of dead political or spiritual leaders, brilliantly persuasive hucksters, and all manner of chatbots tuned to different stations on the ideological dial. And when these things malfunction, as they inevitably will, we may find ourselves stuck inside a PKD novel whose exit is barred by a talking door demanding a form of e-cash that does not work with our operating system.

The success of these others implies nothing about sentience. That’s the rub: before machines actually achieve independent sentience (whatever that means exactly), the appearance of such beings will be all but guaranteed by marketing and our own desire. Agency, in other words, will also be the result of hype and packaging, as products and services are designed — forgive me, trained — to exploit our latent animism and desire for emotional connection, fantasy, and expert authority. In the consumer entertainment landscape, chatbots will inevitably manifest as personas drawn from science fiction, anime, and kids’ media, creating another invasive vector for engaging if unsettling fictions. They won’t seem like gods, I’d bet, because technology has a way of rendering its marvels banal. All the while, though, something abstract and alien will be growing behind the mask, as the protean AIs learn to excel at interacting with and manipulating humans, even as their deeper goals — whether emergent or programmed — become increasingly opaque. And this very gap will ensure that things stay seriously peculiar even as the well-manicured bots confidently march out of the uncanny valley into the mundane world.

In the face of this, some social and technological critics have seized their bullhorns. It is vital, they say, that we remember that AI chatbots are just algorithms, nothing more than “stochastic parrots” without values, or souls, or hearts of their own. If we do start seeing them as reliable oracles, or persons with rights, or cuddly BFFLs, we are getting played by the empathy engineers. Even the discourse of existential risk, some say, is another function of Valley hype.

There may well be upper limits to current machine learning techniques, and there is no doubt that the hype machine is at full churn. But such arguments are wedded to an old-school style of ideological critique that, to my mind, doesn’t reckon with the big enchilada questions that AIs raise about intelligence, language, evolution, and the constitution of the self (to say nothing of the nobility of parrots). These critics also, again, have not absorbed perhaps the most important legacy of post-structuralism: the recognition that language, and especially writing, is already alien, machinic, and nonhuman. By technologizing language at such a high semantic level, we can more easily glimpse the squigglings of Uncle Bill’s “virus from outer space.” Finally, I am afraid such AI critiques also have a snowball’s chance in hell of staunching the warp ahead, in part because they rest on a modern sense of humanism that is itself dissolving in the face of our no-longer-modern conditions. Good riddance, maybe, but there is a wide spectrum of post-humanisms on deck, and we must mutate wisely.

AI development itself is bound up with a certain way of answering or performing the question of the (post)human. As Ezra Klein puts it, the culture of the AI developers he knows is “weirder than most folks can imagine.” Ezra, who has also lived in San Futurisco, doesn’t elaborate, but I will. We are talking psychedelics, polyamory, Burning Man mindfuck projects, bio- and social-hacking, transhumanist mind science, and a philosophical outlook that lands somewhere between merciless utilitarian rationalism (which can get pretty nutty) and hedonic libertarianism. Many of these supernerds also share fatalistic convictions about an on-rushing Singularity, in whose light all those exponential curves that materially characterize the development of AI look like so many lift hills leading up to the most whiz-bang black-hole roller-coaster asymptote in history.

How does this Bay Area bouillabaisse concretely shape the development of AI? It’s an impossible question to answer. But I think it’s fair to say that a lot of pit monkeys in the AI race are up for a Mad Science Grand Prix, the revving engines and squealing tires of which are now causing many of us to quake in our boots and some to push back as hard as they possibly can. For my part, I can’t imagine why anyone with real power over AI wouldn’t slam on the brakes so that we can try to integrate these extraordinary technologies at something approaching a normal mammal pace. But maybe existential risk is the ultimate thrill. At least that might explain why almost fifty percent of AI developers believe there’s a ten percent chance (or more) that AI will kill us all, and still get up the next morning to hit those keys.

Sam Altman, the CEO of OpenAI, the outfit that gave us DALL-E and GPT and is by most accounts the head of the global pack, believes that we are already well on our way to an inevitable and literal merging with machines. In a blog essay originally posted on Medium, he writes:

Although the merge has already begun, it’s going to get a lot weirder. We will be the first species ever to design our own descendants. My guess is that we can either be the biological bootloader for digital intelligence and then fade into an evolutionary tree branch, or we can figure out what a successful merge looks like.

Talk about revenge of the nerds! There is so much nuance and subtlety excised from this narrative of determinism, so many skewed philosophical axioms to expose, and so many sexier and more visionary science fictions to set against this bleak devil’s bargain. But here my point is much simpler: you probably don’t want the folks managing AI’s release into society running Singularity memes in their noggins any more than you want the management of public lands in the United States to be in the hands of apocalyptic Christians who think conservation doesn’t really matter because the rapture is nigh. (Which actually happened in the 1980s, when Reagan made nutty James Watt Secretary of the Interior.)

With Altman, as with so many in this biz, the computational bias of computer engineering has bloomed into a totalizing psychology. As the CEO put it in a famous tweet, referring to the “stochastic parrots” critique co-authored by the linguist Emily M. Bender: “I am a stochastic parrot, and so r u.” In other words, our brains are just running algorithms, making statistical guesses, and generating predictive processes that compose our reality almost entirely from the inside. When it comes to cognition, mental models, and even language, I have much sympathy for this perspective, but I refuse to sweep consciousness itself under the rug, let alone all the mysteries and cosmovisions that should keep us humble in the face of Mystery. There is a big difference between a statistical parrot and a statistical parrot that’s awake — let alone one that dreams, and loves, and weeps at the resplendent world disappearing through our screens.

Perhaps the most revealing bit in Altman’s post is a footnote found on his blog but not on Medium:

I believe attention hacking is going to be the sugar epidemic of this generation. I can feel the changes in my own life — I can still wistfully remember when I had an attention span. My friends’ young children don’t even know that’s something they should miss. I am angry and unhappy more often, but I channel it into productive change less often, instead chasing the dual dopamine hits of likes and outrage.

What strikes me most about this sad admission is its passivity. Where is that heroic Silicon Valley solutionism when you need it? Altman is describing a major feature of our collective crisis, as consciousness is looped into arresting patterns of poison, addiction, attention deficit, manipulation, rage, and misery. But rather than possible steps toward healing or mitigation, let alone older discourses of human augmentation or cyborg mutualism, we hear a fatalistic submission to the ADHD click-bait demon. “Hacking” here is no longer something humans do to carve a space of escape or play out of machines designed for other purposes; hacking is something deployed against our own minds.

Altman has decided that there is no real rapprochement possible between the AI alien and the human mind and heart, and that the AI alien inevitably wins. I have been reading science fiction, nihilist philosophy, evolutionary psychology, and media studies for decades, so I am not surprised by any of this. But here this fatalism reminds me of the etymological root of weirdness: the Anglo-Saxon word Wyrd, which means fate or destiny. Macbeth’s grim fate after encountering the Wyrd Sisters in Shakespeare’s play is a reminder of how the problems of weirdness are often problems of agency, of will and its seductive or malevolent puppet-masters. Sometimes AI does seem like the Doom of modernity. But even the Beowulf poet observed that

Wyrd often saves an undoomed hero as long as his courage is good

Maybe Altman’s just more steely-eyed than I. But he may simply have lost heart along with his attention span. Bon courage, Sam! How sad to be on top of your game only to turn around and meekly be assimilated.

Weird Reality

The third sort of weirdness that I described in my book is ontological. There is something slippery about how reality itself is constituted, which does not (necessarily) involve any supernatural order of things. The shockingly counter-intuitive consequences of quantum physics, described as “quantum weirdness” as early as the 1970s, are a peerless scientific example, but the thrust of what I call weird naturalism is broader than that. As JF Martel noted in the introduction to a recent episode of the Weird Studies podcast,

Under a certain weird aspect, reality is impervious to all system thinking . . . there is something so strange in the real that the only truly rational response to it is wonder and fear.

That episode is devoted to Ramsey Dukes’s Sex Secrets of the Black Magicians Exposed, a 1970s classic of occult theory whose portrait of magic requires no suspension of naturalistic assumptions beyond a certain pluralistic attitude towards experience. I read this book in the 1980s, and it influenced me, along with the work of Robert Anton Wilson and others, to think about how magical and mystical forces and experiences do not stand outside modernity, but are interpolated within it, even within our technologies. That’s part of what later attracted me to the word “weird,” which we often use to describe mysterious events — coincidences, prophetic dreams, déjà vu, seemingly telepathic intuitions — without having to reach for explanations, either supernatural or rationalist. All that’s required is a sensitivity to the edges of experience, a lack of smothering judgment, and a deep appreciation for the eternal return of anomaly — those notable deviations from informed expectation that just keep popping up, no matter how hard we work to solve or explain them.

Now on the surface, GLLMMs are nothing but computational “system thinking” in disenchanted data-hungry overdrive. But one of the paradoxes of these systems is that while it is not hard to rationally understand their operating principles, the products of those operations are unpredictable, even un-analyzable. One of the genuinely anomalous things about them is that when they do spit out anomalies, the humans who built them don’t even pretend that they can explain all the mechanisms that led to those anomalies. There are too many unknown unknowns. That’s a new one, folks, a double-order anomaly. You can call it “emergence” if you want, but that term sounds like a band-aid for WTF to me.

Other sorts of anomalies, in a different sense of the term, will also greet us if and when today’s accelerating AIs and their monster spawn are unleashed into the human world at large, driven by a winner-take-all capitalist game already inimical in so many ways to human flourishing, sense-making, and even sanity. It seems that the symbolic world will grow infested with smarter-than-us bots; technological agents will simulate features of the human we took to be unique to us; disinformation and scams will go through the roof; and even our cherished expressive arts will be invaded by statistical parrots that dominate the charts and outfox the literati. What Niall Ferguson calls “inhuman intelligence” will also do all sorts of stuff we can’t even imagine. A huge swath of the social, institutional, and technological interactions we now take (barely) for granted will jump the predictive track, intensifying the disorientation, disruption, and reality distortion that have already been mounting over the last twenty-odd years.

These changes suggest that we might want to dust off a now quaint term minted in the late 1990s. “Global weirding” was originally offered as a spicier and slightly tongue-in-cheek replacement for “global warming,” which itself did not adequately reflect the full-spectrum turbulence that, as we now know too well, also involves arctic vortexes and chilly atmospheric rivers. Compared to today’s wonky term “climate change,” global weirding better marks our felt sense of endless strange weather and looming if undetermined threat. But now we get to amplify that animal dread with an alien nerd-djinn’s careful-what-you-wish-for refashioning of computation, symbolic communication, R&D, security, entertainment, and surveillance capitalism. Maybe, just maybe, the machines can actually help with the polycrisis, but even then it’s going to be a long strange trip without the grace of a respite.

OK, enough. In this piece I have tried to grapple with global weirding by playing with the word and the concepts that feed it. Grappling with weirdness may help us learn to work with disturbing and even terrifying transformations that overwhelm our ability to model or comprehend. Weirdness gives us a vibe to tango with, an imaginal tradition to plug into, even an ethics of wayward otherness to orient us, so that we can work on what really matters amidst the purple haze of the reality-tunnel information war that has already dawned. Weird can be icky-sticky, but getting to know its depths provides a strange power and steadiness, not unlike the ability to be OK in the presence of corpses, or gods, or pathologies of all stripes. Nothing human is alien to me, and nothing alien either.

And it’s important to remember that weirdness is a fundamentally ambivalent word-world, a term of fear and wonder, a line of flight from repetition and the limitations of all prediction, a marker of interregnum, a portal between worlds. I say this here not just to remind you (and especially often pessimistic me) that some marvelous things can and will come from these technologies. I mean, solving the protein folding problem is fantastic. But that’s not the point here. More importantly, I want to invoke the weirdness that remains above and beyond calculation, the secret commonwealth that may paradoxically grow more visible, and more marvelous, at the very moment of its last submission to the churn of infinite computation. As machines colonize the human, in other words, a more fundamental mystery may leak through: the weirdness that sentient beings are and have always been: luminous cracks in an order of things no longer ordered.

I hope you enjoyed this flicker of Burning Shore. These pieces come when they do, and I avoid the hamster wheel by not offering paid subscriptions. But you can always drop a tip in my Tip Jar.